Recent research into user perceptions of large language models (LLMs) reveals a significant disparity in how intelligence and experience are attributed to these AI systems, impacting trust and advice-taking behaviors.

Users generally ascribe higher intelligence-related traits to LLMs, with an average attribution score of 59.39 (SD = 24.99), compared to a much lower attribution for experience-related traits, averaging 12.25 (SD = 19.06). Experience here refers to mental states such as emotions and consciousness.
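To give a feel for the size of this gap, the reported means and standard deviations can be turned into a rough standardized difference. The sketch below is only illustrative: the function names are hypothetical, the pooled standard deviation is a simple average of the two variances, and the calculation ignores any correlation between the two ratings given by the same participant, so it should not be read as the effect size reported by the researchers.

```python
import math


def mean_difference(mean_a: float, mean_b: float) -> float:
    """Raw gap between two average attribution scores."""
    return mean_a - mean_b


def standardized_difference(mean_a: float, sd_a: float,
                            mean_b: float, sd_b: float) -> float:
    """Cohen's-d-style ratio: raw gap divided by a pooled SD.

    Assumption: both variances are weighted equally and the two
    measures are treated as if they were independent.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Values as reported in the text above.
intelligence_mean, intelligence_sd = 59.39, 24.99
experience_mean, experience_sd = 12.25, 19.06

gap = mean_difference(intelligence_mean, experience_mean)
d = standardized_difference(intelligence_mean, intelligence_sd,
                            experience_mean, experience_sd)

print(f"Raw gap in attribution scores: {gap:.2f}")
print(f"Rough standardized difference: {d:.2f}")
```

Under these simplifying assumptions the gap comes out at roughly two pooled standard deviations, which is consistent with describing the experience attributions as much lower.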

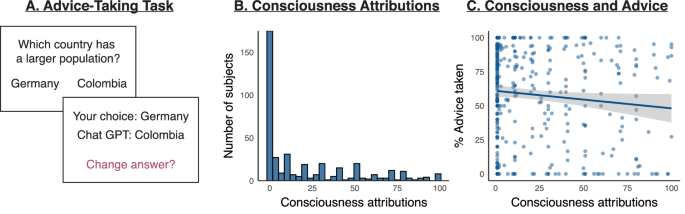

This distinction is critical because it influences how much users trust and follow advice from LLMs. Bayesian analyses showed strong evidence against a positive correlation between consciousness attribution and advice-taking. In fact, experience-related mental state attributions were negatively related to advice-taking, suggesting that perceiving LLMs as having emotions or consciousness may reduce trust.

Conversely, intelligence-related attributions enhanced trust, indicating that users are more likely to rely on LLMs when they perceive them as intelligent than when they perceive them as capable of experience.

As AI systems continue to evolve, understanding these nuanced perceptions is vital for developers and communicators aiming to foster appropriate trust levels. The findings highlight the importance of framing AI capabilities in terms of intelligence rather than experiential qualities to maintain user confidence.

"Measurements of various mental state attributions revealed that attributions of intelligence-related traits enhanced trust, whereas experience-related traits tended to reduce trust."

"Bayesian analyses revealed strong evidence against a positive correlation between attributions of consciousness and advice-taking; indeed, a dimension of mental states related to experience showed a negative relationship with advice-taking."

These insights provide a foundation for future AI-human interaction research and practical applications in AI design and communication strategies.

Users tend to attribute intelligence to large language models (LLMs) far more readily than experiential qualities such as emotions or consciousness, and this affects how much they trust them. Intelligence-related attributions increase trust, while experience-related attributions decrease it, with the analyses showing a negative link between experience-related attributions and advice-taking.